“It was the best of times, it was the worst of times, it was the age of wisdom, it was the age of foolishness, it was the epoch of belief, it was the epoch of incredulity, it was the season of light, it was the season of darkness…” These are the words of Charles Dickens in his novel A Tale of Two Cities. The passage beautifully juxtaposes the contradictions, paradoxes, and dualities of human nature and history.

The same juxtaposition applies just as well to AI and cybersecurity today: it is the best of times, it is the worst of times, it is the age of innovation, it is the age of vulnerability, it is the epoch of protection, it is the epoch of exploits, it is the season of digital guardians, it is the season of digital adversaries. Drawing inspiration from Dickens, these words capture the contrasting yet intertwined nature of AI and cybersecurity in our modern, digital world.

AI adoption skyrocketed worldwide in 2023. Individuals, businesses, and governments have integrated AI into their processes and operations, from everyday functions such as social media and email to more mature applications in chatbots and virtual assistants, automation, product development and engineering, finance and investment, and security. This breadth of use reflects growing confidence in the technology and the speed of its spread. Forbes reports that the AI market is expected to grow at an annual rate of 37.3% from 2023 to 2030 and to reach an estimated $407 billion by 2027. While the capabilities and benefits of AI deserve to be celebrated, the technology also has drawbacks. For example, millions of workers could be displaced as AI advances and adoption grows. Moreover, questions are increasingly being raised about bias, misinformation, and AI’s ethical use, governance, and regulation.

While the AI revolution offers innovative solutions for cyber protection and resilience, it also introduces new vulnerabilities and threats, drawing a battle line between those who leverage AI to fortify their security and those who exploit it to undermine security. The ‘Estimated Cost of Cybercrime’ global indicator predicts that the annual cost of cybercrime will reach $8 trillion in 2023, more than 16 times Nigeria’s 2022 GDP ($477.39 billion, according to the World Bank’s national accounts data). These losses are forecast to keep growing, reaching $10.4 trillion by 2025, a compound rate of roughly 14% a year. A significant driver of these losses is emerging technology such as AI, which cyber threat actors are leveraging to increase the reach, sophistication, and efficiency of their attacks, leaving traditional cybersecurity solutions in the dust. In response, a growing number of cybersecurity OEMs are integrating AI to improve the identification of, protection against, detection of, response to, and recovery from threats. This article explores both sides of the conversation, presenting AI as both villain and hero and highlighting the challenges and risks as well as the benefits and opportunities it brings to our cyber world.
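As a quick sanity check on the figures above, the short Python sketch below recomputes the multiple of Nigeria’s GDP and the compound annual growth rate implied by moving from $8 trillion in 2023 to $10.4 trillion in 2025. It uses only the numbers quoted in this article; the function and constant names are illustrative.

```python
def gdp_multiple(cybercrime_cost_usd: float, gdp_usd: float) -> float:
    """How many times larger the cybercrime cost is than a given GDP."""
    return cybercrime_cost_usd / gdp_usd


def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate needed to go from start_value to end_value."""
    return (end_value / start_value) ** (1 / years) - 1


COST_2023 = 8.0e12           # estimated cost of cybercrime in 2023 (USD)
COST_2025 = 10.4e12          # forecast cost of cybercrime in 2025 (USD)
NIGERIA_GDP_2022 = 477.39e9  # World Bank figure quoted above (USD)

multiple = gdp_multiple(COST_2023, NIGERIA_GDP_2022)
cagr = implied_cagr(COST_2023, COST_2025, years=2)

print(f"Cost vs. Nigeria's GDP: {multiple:.1f}x")      # Cost vs. Nigeria's GDP: 16.8x
print(f"Implied annual growth 2023-2025: {cagr:.1%}")  # Implied annual growth 2023-2025: 14.0%
```

Compounding $8 trillion at about 14% a year for two years yields the $10.4 trillion forecast.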

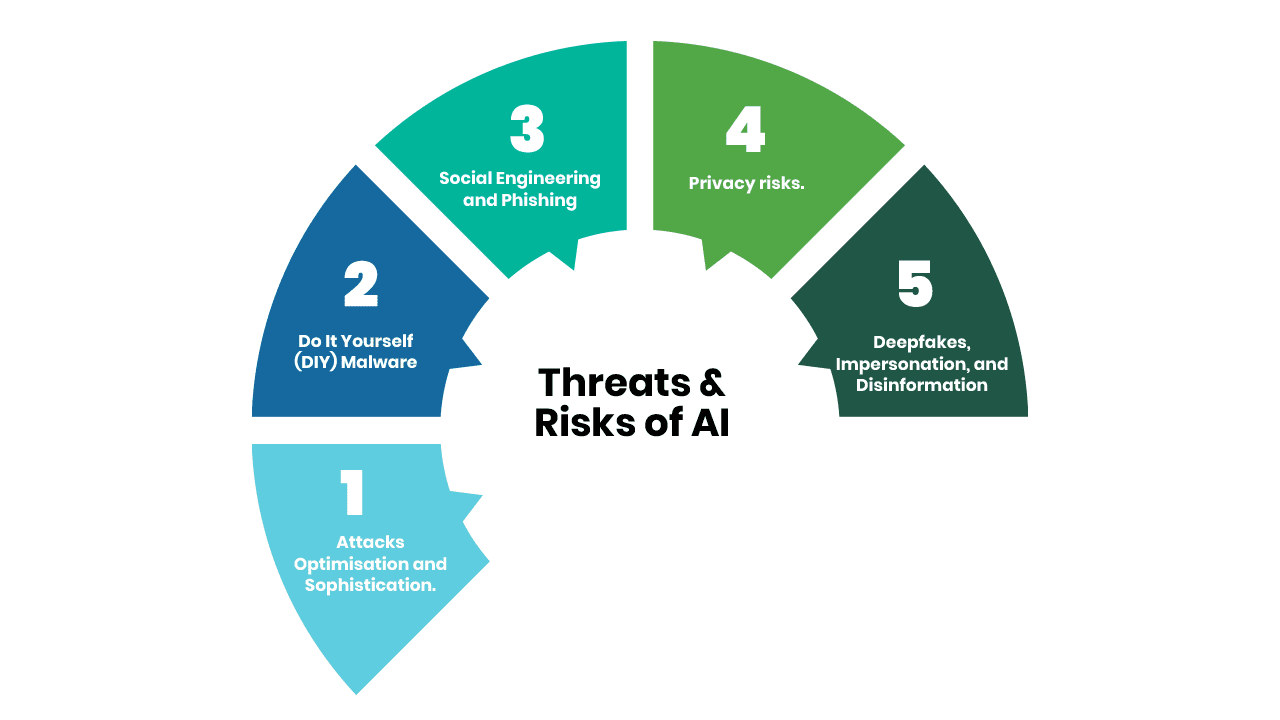

AI, The Villain – Threats & Risks

AI has the potential to make significant contributions to improving cybersecurity. However, it does present certain challenges and risks. Like any tool, AI’s impact can be double-edged – serving both positive and negative purposes. Among the foremost threats and risks are:

1. Attack Optimisation and Sophistication:

AI has fundamentally changed the cyber threat landscape by simplifying attacks and enabling them to be launched with unprecedented speed. Attackers can employ AI to control massive botnets for simultaneous DDoS attacks, orchestrate phishing campaigns, and rapidly crack passwords. Moreover, AI-enabled threats can be polymorphic, evolving and mutating constantly so that known detection patterns no longer match them. The speed at which these attacks can be executed makes the threat considerably worse: it shrinks the time organisations have to respond to incidents and helps state-sponsored advanced persistent threats (APTs) establish a presence within networks and systems. The June 2023 Trellix CyberThreat Report, for example, documented an escalation of nation-state cyber activity for espionage, warfare, and disinformation in service of political, economic, and territorial ambitions. In addition, cybercriminals are exploiting Large Language Models (LLMs) to build tools designed explicitly for malicious purposes. In July 2023, the Netenrich threat research team discovered FraudGPT, dubbed ChatGPT’s evil twin, being advertised and sold on both the dark web and Telegram. Notably, FraudGPT lacks the safeguards built into ChatGPT, making it an ideal tool for hackers to write malicious code, identify security vulnerabilities, develop phishing and scam websites, and locate sites susceptible to carding, among other illicit activities.
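Why polymorphism defeats known detection patterns can be illustrated without any real malware. The sketch below assumes a hypothetical, deliberately naive scanner that flags files only by exact SHA-256 hash. Two harmless payloads that behave identically, but differ by one inserted junk comment, produce entirely different hashes, so a signature taken from one misses the other. Real detection engines use far richer signatures; this only shows the underlying weakness of exact matching.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Return the hex SHA-256 digest used here as an exact-match 'signature'."""
    return hashlib.sha256(data).hexdigest()


# Harmless stand-ins: a "known" sample and a "mutated" variant that behaves
# identically but carries an inserted junk comment.
original = b"print('hello')\n"
mutated = b"# junk 7f3a\nprint('hello')\n"

# Hypothetical signature database built from the original sample only.
known_bad_signatures = {sha256_hex(original)}


def is_flagged(sample: bytes) -> bool:
    """Exact-hash scanner: flags a sample only if its digest is already known."""
    return sha256_hex(sample) in known_bad_signatures


print(is_flagged(original))  # True
print(is_flagged(mutated))   # False
```

Every mutation forces a new signature, which is why defenders increasingly rely on behavioural and anomaly-based detection rather than exact matches.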

2. Do It Yourself (DIY) Malware:

The advent of text-based generative AI, exemplified by tools like ChatGPT, epitomises the double-edged nature of AI in cybersecurity. While these systems can generate code and aid in many programming tasks, they also open the door to misuse. Individuals with malicious intent can manipulate these tools to craft malicious code such as ransomware, keyloggers, and privilege escalation scripts without expert-level programming skills, contributing to an alarming surge in the volume and range of malware. This shift poses multifaceted challenges: a more diverse malware landscape, lower technical barriers for would-be threat actors, and greater difficulty in detection. The threat posed by DIY malware is real. In March 2023, researchers at HYAS Labs demonstrated the capabilities of AI-powered malware by creating BlackMamba, a proof-of-concept polymorphic malware, with the assistance of ChatGPT. BlackMamba is a keylogger that uses AI to alter its behaviour each time it runs; it captures keystrokes and sends the collected data through a Microsoft Teams webhook to a malicious Teams channel. BlackMamba was tested repeatedly against an industry-leading EDR (Endpoint Detection and Response) solution and consistently went unnoticed, triggering zero alerts or detections.

3. Social Engineering and Phishing:

Social engineering is a manipulation technique, often relying on psychological tactics, that malicious actors use to deceive individuals into divulging sensitive information or granting access to secure systems; it is essentially “hacking the individual”. Phishing is a specific type of social engineering attack that uses deceptive emails, messages, or websites that appear to come from legitimate sources to trick individuals into revealing confidential information such as passwords, credit card details, or personally identifiable information. Since the beginning of 2019, the number of phishing attacks has grown by more than 150% per year, and in 2022 the Anti-Phishing Working Group (APWG) logged more than 4.7 million attacks, a record year. Unfortunately, it is about to get worse. Advances in AI have ushered in a new era of phishing and social engineering attacks, challenging our ability to distinguish genuine from malicious communication. The days of typos, misspellings, and grammatical errors as tell-tale signs of phishing emails are largely gone, replaced by AI’s ability to craft accurate, convincing messages. By collecting and analysing data from various sources, AI can create attack strategies tailored to specific organisations. For instance, it can analyse social media posts, employee profiles, or leaked information to craft convincing spear-phishing or whaling emails. This personalised approach exploits individual interests and relationships, making these AI-enabled phishing attempts hard to identify.

4. Privacy Risks:

Today, we generate and share more data than at any other time in human history, and safeguarding our privacy has become a complex challenge. This is especially true of AI, for which data is not just one ingredient but the very lifeblood. Our online activities create a vast pool of data; even simple actions like liking a photo on Instagram or Facebook leave a trace of our digital presence. The real concern arises as AI systems grow more sophisticated and can use this data to make decisions about our lives without our realising it; imagine a more advanced version of the targeted ads we receive based on our browsing patterns. In addition, AI’s improvement relies heavily on our interactions with it. Generative AI systems like ChatGPT and Bard are refined through approaches such as Reinforcement Learning from Human Feedback (RLHF), which fine-tunes models based on human input and feedback: the more data we feed these models, the better they become. As we interact with them continuously and realise their power, we may be tempted to cross boundaries and share sensitive data. This increases the risk of that data falling into the wrong hands through security vulnerabilities, potentially resulting in unwanted disclosures like the one OpenAI experienced in March 2023. Further, as AI becomes increasingly integrated into voice assistants like Siri, Alexa, and Bixby, and into social media platforms like Snapchat and TikTok through chatbots and ‘virtual buddies’, users unaware of the associated risks may perceive these AI bots as trustworthy friends or confidants and unwittingly share sensitive information, enlarging the attack surface and increasing the chances of a data breach.

5. Deepfakes, Impersonation, and Disinformation:

Deepfakes (videos, audio, photos, and text created using AI) are increasingly being used to impersonate high-profile individuals and corporate executives and to target organisations, blurring the line between authenticity and deception to an unprecedented degree. This manipulation of media content has opened new avenues for cybercriminals to deceive individuals and businesses, resulting in disinformation, fraudulent activities, and damage to reputations. Deepfake technology could be considered a “weapon of mass deception,” and cybercriminals have already exploited it successfully for financial gain.

In a 2020 study conducted by University College London, deepfakes were identified as the most serious AI-related threat to cybersecurity. The era of deepfakes has introduced fresh risks to businesses, one of the most prominent being Business Identity Compromise (BIC). BIC involves using deepfake technology to create fake corporate personas or mimic existing employees, often assuming the identity of well-known, high-ranking personnel within the organisation. In early 2020, cybercriminals cloned the voice of a company director in the United Arab Emirates (U.A.E.) and persuaded a Hong Kong bank manager to transfer a staggering $35 million. This incident represents the largest publicly disclosed loss attributed to fraudulent content created with deepfake technology.

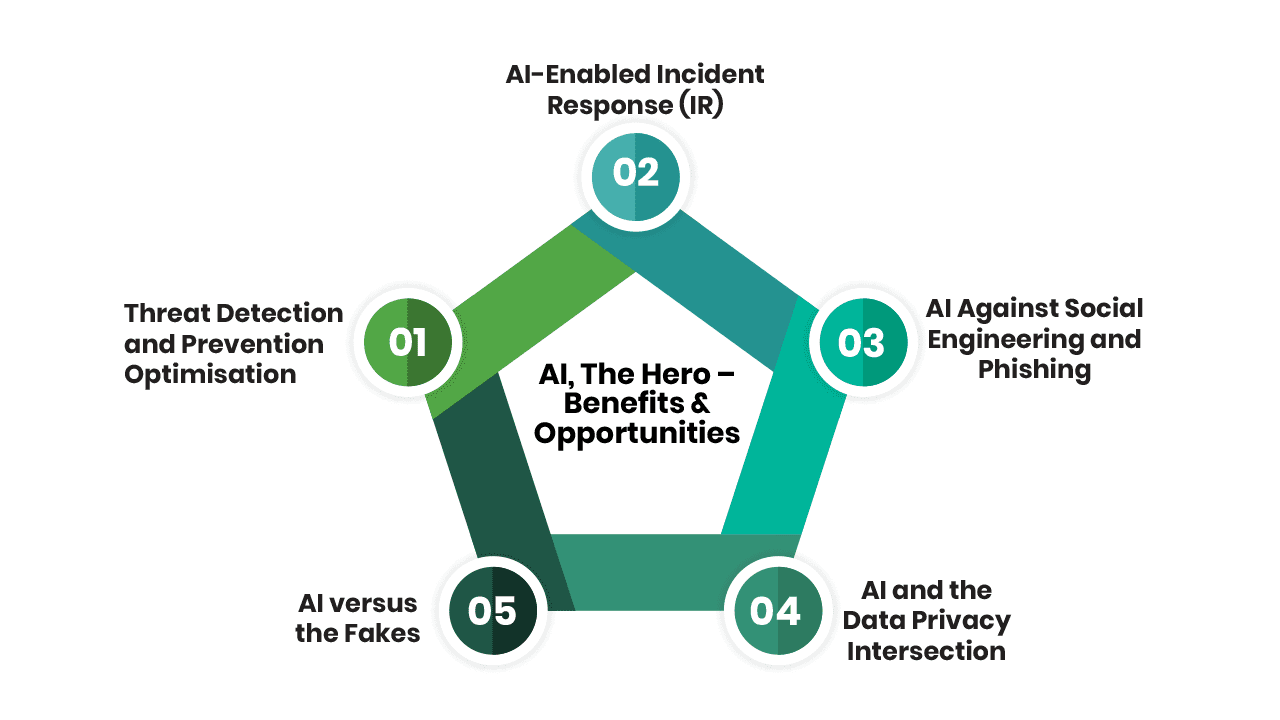

AI, The Hero – Benefits & Opportunities

Having examined the cyber threats and risks associated with AI’s misuse, we now turn to the other, equally important side of this narrative.

Here, AI emerges as a formidable ally, helping to fortify and protect our digital world.

1. Threat Detection and Prevention Optimisation:

The cyber threat landscape is constantly in flux, evolving at great speed and scale and posing challenges for human threat analysts and traditional threat detection and prevention systems. AI-enabled threat detection and prevention solutions, by contrast, harness machine learning and cognitive algorithms to establish baseline patterns of normal activity within a network or system, enabling them to spot anomalies, indicators of compromise, and mutated forms of malware designed to evade detection. Cyble Inc., a threat intelligence provider, estimates that over the next five years the threat intelligence industry is positioned to become a high-speed, machine-driven operation powered by AI, enabling organisations to improve the accuracy and precision of their cyber threat detection and prevention while responding in real time to both known and zero-day threats. As the previous section showed, hackers can use AI too, and as they increasingly leverage it to refine their attack strategies, AI-enabled threat detection and prevention solutions become a pivotal countermeasure. The result is a strategic AI-versus-AI battle, with AI-driven defences working to identify and thwart attacks that are both optimised and sophisticated.
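The “learn a baseline, then flag deviations” idea can be sketched with a simple statistical model. The example below assumes hypothetical hourly counts of failed logins for one account, learns a baseline (mean and sample standard deviation) from a training window, and flags new observations more than three standard deviations away. Commercial AI-enabled products use much richer features and learned models; the data and the 3-sigma threshold here are illustrative assumptions, not a recommended configuration.

```python
from statistics import mean, stdev


def build_baseline(history: list[float]) -> tuple[float, float]:
    """Learn 'normal' behaviour as the mean and sample standard deviation."""
    return mean(history), stdev(history)


def find_anomalies(observations: list[float], baseline: tuple[float, float],
                   threshold: float = 3.0) -> list[int]:
    """Return the indices of observations that deviate strongly from the baseline."""
    mu, sigma = baseline
    anomalies = []
    for i, value in enumerate(observations):
        if sigma == 0:
            deviates = value != mu  # flat baseline: any change is unusual
        else:
            deviates = abs(value - mu) / sigma > threshold  # z-score test
        if deviates:
            anomalies.append(i)
    return anomalies


# Illustrative hourly failed-login counts for one account (training window).
training_window = [2, 3, 1, 4, 2, 3, 2, 1, 3, 2, 4, 3]
# New hours to score; the fourth hour shows a burst typical of password guessing.
new_hours = [3, 2, 4, 57, 2]

baseline = build_baseline(training_window)
print(find_anomalies(new_hours, baseline))  # [3]
```

A fixed threshold is the simplest possible detector; machine-learning approaches replace it with models that capture seasonality, correlations between signals, and per-entity behaviour.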

2. AI-Enabled Incident Response (IR):

Given that no information security control is foolproof and some inherent risk always remains, incident response is crucial to any organisation’s information security program. However, incident response teams often grapple with complex challenges, chiefly the substantial volume of data, alerts, and logs generated by diverse IT systems, which leads to burnout; more than 70% of SOC analysts report experiencing it, according to research by Tines. AI is emerging as a transformative solution that streamlines and enhances the IR process. In the detection and analysis phases, AI employs advanced algorithms and models that learn autonomously from data, identify patterns, and categorise incidents. This capability proves invaluable in filtering out false positives, correlating and analysing data from disparate sources, spotting anomalies, and recognising indicators of compromise. AI also helps pinpoint the origin, scope, and impact of incidents and prioritise them accordingly. In the containment, response, and recovery phases, AI supports dynamic decision-making, allowing appropriate containment and remediation actions to be executed quickly with minimal or no human intervention. By continuously learning from previous incidents, AI can use these insights to refine incident response plans, playbooks, and its own underlying algorithms and models.

Recent findings, such as those in IBM’s 2023 Cost of a Data Breach Report, underscore the tangible benefits of integrating AI into incident response. Organisations that extensively used AI and automation in their incident response processes shortened the breach lifecycle by 108 days compared with organisations without these capabilities (214 days versus 322 days). This trend highlights the growing importance of AI in fortifying defences against cyberattacks as technology continues to evolve.
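The correlation and prioritisation step in AI-assisted incident response can be sketched in a few lines. The example below is a simplified, rule-based stand-in for what AI-driven platforms learn from data: it assumes each alert carries a host, a source tool, and a severity from 1 to 10 (all hypothetical fields), groups alerts by host, and ranks a host higher when independent tools corroborate each other. The 50% corroboration bonus is an arbitrary illustrative weight; a production system would learn such weights rather than hard-code them.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Alert:
    host: str      # affected asset (hypothetical field)
    source: str    # tool that raised the alert, e.g. "edr", "firewall"
    severity: int  # 1 (low) to 10 (critical)


def prioritise(alerts: list[Alert]) -> list[tuple[str, float]]:
    """Group alerts per host and score them; corroboration across tools boosts the score."""
    by_host = defaultdict(list)
    for alert in alerts:
        by_host[alert.host].append(alert)

    scores = []
    for host, host_alerts in by_host.items():
        max_severity = max(a.severity for a in host_alerts)
        distinct_sources = len({a.source for a in host_alerts})
        # Illustrative weighting: each extra independent source adds 50%.
        score = max_severity * (1 + 0.5 * (distinct_sources - 1))
        scores.append((host, score))
    return sorted(scores, key=lambda item: item[1], reverse=True)


alerts = [
    Alert("laptop-17", "edr", 6),
    Alert("laptop-17", "proxy", 5),
    Alert("laptop-17", "email-gateway", 4),
    Alert("web-01", "firewall", 7),
    Alert("web-01", "firewall", 7),  # duplicate noise from a single tool
    Alert("printer-3", "ids", 2),
]

for host, score in prioritise(alerts):
    print(host, score)
# laptop-17 12.0
# web-01 7.0
# printer-3 2.0
```

The laptop flagged by three independent tools outranks the repeated, uncorroborated firewall alert, which is the kind of noise reduction that helps tired SOC analysts focus on what matters.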

3. AI Against Social Engineering and Phishing:

A widely held belief in the information security domain is that people are the weakest link in the security chain. The bad guys know this too, which is why 90% of all cyberattacks begin with a social engineering exploit, as reported by the Cybersecurity and Infrastructure Security Agency (CISA). In addition to taking a defence-in-depth approach to implementing security controls and conducting continuous monitoring and reviews, one of the best ways to strengthen an organisation’s security posture is to educate employees on current trends, prevalent attack methods, and effective defence techniques. Unfortunately, traditional security awareness training often falls short: its content is outdated, its delivery is dull, and its impact is limited. With AI, employee awareness initiatives can be revolutionised through engaging, personalised, and adaptive programs that simulate modern, real-world scenarios. These programs improve employees’ competence and provide valuable feedback and tailored recommendations, promoting continuous improvement. AI is also advancing email security: greater accuracy, real-time dynamic analysis, and behavioural insights augment the monitoring of email sources, content, context, and metadata, making it easier to identify and thwart potential phishing threats. Incorporating AI into employee cyber awareness and education is therefore critical to strengthening an organisation’s information security posture today. After all, you are only as strong as your weakest link.
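To make the content-analysis side of AI email security concrete, the sketch below trains a tiny multinomial Naive Bayes classifier on word counts. The six training messages are invented toy examples, not real data, and a real email-security product would also weigh sender reputation, headers, links, and behavioural signals. Treat this as an illustration of one classic technique, not a description of how any particular product works.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lower-case word tokens."""
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayes:
    """Multinomial Naive Bayes with Laplace (add-one) smoothing."""

    def fit(self, messages: list[str], labels: list[str]) -> "NaiveBayes":
        self.classes = sorted(set(labels))
        self.priors = {c: labels.count(c) / len(labels) for c in self.classes}
        self.word_counts = {c: Counter() for c in self.classes}
        for text, label in zip(messages, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocab = set().union(*self.word_counts.values())
        self.totals = {c: sum(self.word_counts[c].values()) for c in self.classes}
        return self

    def predict(self, text: str) -> str:
        """Pick the class with the highest log-probability for the message."""
        scores = {}
        for c in self.classes:
            score = math.log(self.priors[c])
            for word in tokenize(text):
                count = self.word_counts[c][word]
                score += math.log((count + 1) / (self.totals[c] + len(self.vocab)))
            scores[c] = score
        return max(scores, key=scores.get)


# Invented toy training set, for illustration only.
train_texts = [
    "urgent verify your account password now",
    "your account is suspended click here to verify",
    "confirm your bank details immediately to avoid closure",
    "agenda for tomorrow's project meeting attached",
    "lunch on friday with the team",
    "please review the quarterly report draft",
]
train_labels = ["phishing", "phishing", "phishing", "legit", "legit", "legit"]

model = NaiveBayes().fit(train_texts, train_labels)
print(model.predict("verify your password immediately"))  # phishing
print(model.predict("team meeting agenda for friday"))    # legit
```

Because AI-written phishing no longer contains obvious typos, modern systems combine learned content signals like these with metadata and behavioural context rather than relying on any single feature.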

4. AI and the Data Privacy Intersection:

Regardless of the model, algorithm, or application, all AI tools share a fundamental characteristic: their reliance on data. At its core, AI depends heavily on various types of data, including personal information, for training and continuous improvement. This data-centric nature brings AI within the purview of privacy laws and regulations such as the Nigeria Data Protection Act and the EU General Data Protection Regulation (GDPR). The primary privacy concern in AI is the potential for data breaches and unauthorised access to personal data. With the extensive collection and processing of data comes a significant risk that it could fall into the wrong hands, whether through hacking or other security vulnerabilities. Still, AI can help safeguard Personally Identifiable Information (PII) by augmenting existing techniques such as anonymisation and de-identification, automatically removing or obscuring PII in datasets so that it becomes considerably harder to trace information back to individuals. AI can also power privacy-enhancing browsing tools that block tracking cookies, analyse website privacy policies, and warn users about potentially invasive data collection and processing practices.
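A rule-based baseline for the de-identification step might look like the sketch below, which replaces email addresses and phone-number-like strings with typed placeholders. The patterns are deliberately simple assumptions, and the person in the sample record is fictional. AI-augmented tools typically add machine-learned entity recognition on top of rules like these to catch names, addresses, and other PII that fixed patterns miss; note that the name in the example survives redaction.

```python
import re

# Illustrative patterns only; real PII detection needs far broader coverage.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "PHONE": re.compile(r"\+?\d[\d\s-]{7,}\d"),
}


def redact(text: str) -> str:
    """Replace each match of every PII pattern with a [TYPE] placeholder."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


record = "Contact Ada Obi at ada.obi@example.com or +234 803 123 4567."
print(redact(record))
# Contact Ada Obi at [EMAIL] or [PHONE].
```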

Further, AI can be used in data masking to generate synthetic or pseudonymous data for testing and development, reducing the exposure of sensitive data in non-production environments. In many workplaces, generative AI has gained substantial traction for its productivity benefits. According to a study by Writer, a generative AI platform for enterprises, 56% of surveyed business leaders (directors and above) said that generative AI boosts productivity by at least 50%. However, this increased adoption is not without risks: the same study found that 46% of business executives believed employees had shared private and corporate information with ChatGPT.
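Pseudonymisation for test data is often done with a keyed hash, so the same input always maps to the same token (preserving joins between tables) while the original value cannot be recovered without the secret key. The sketch below uses HMAC-SHA-256 from Python’s standard library; the key, the field names, the `tok_` prefix, and the 12-character token length are illustrative assumptions. In practice the key would be generated randomly and kept in a secrets manager, never in source code.

```python
import hashlib
import hmac

# Illustrative only: a real key must be random and stored securely, not hard-coded.
SECRET_KEY = b"replace-with-a-securely-stored-random-key"


def pseudonymise(value: str, key: bytes = SECRET_KEY, length: int = 12) -> str:
    """Map a value to a stable, non-reversible token using HMAC-SHA-256."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return "tok_" + digest[:length]


def mask_record(record: dict[str, str], sensitive_fields: set[str]) -> dict[str, str]:
    """Return a copy of the record with sensitive fields replaced by pseudonyms."""
    return {
        field: pseudonymise(value) if field in sensitive_fields else value
        for field, value in record.items()
    }


production_row = {"email": "ada.obi@example.com", "plan": "premium", "country": "NG"}
test_row = mask_record(production_row, sensitive_fields={"email"})
print(test_row)
```

Truncating the digest keeps tokens readable but raises the chance of collisions in very large datasets; keep the full digest where that matters. Generating fully synthetic records is a complementary approach when even stable pseudonyms are too revealing.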

Consequently, it is no surprise that some organisations have taken the draconian approach of banning AI tools altogether, with ChatGPT the most frequently banned AI tool, at 32%. A more forward-thinking strategy is to establish an AI Centre of Excellence (CoE) within the organisation, comprising a diverse group of stakeholders, for governance and control. Such centres are pivotal in formulating relevant AI policies, implementing training and awareness programs, and setting standards aligned with best practices for information security and data privacy. This approach allows organisations to harness the benefits of AI while mitigating the associated risks comprehensively and sustainably.

5. AI versus the Fakes:

Distinguishing fact from fiction has become increasingly challenging in our modern world, as well-crafted audio and visual deepfakes make it difficult to discern what is true. Yet AI can also help identify deepfakes that are imperceptible to the human eye, provided it has access to sufficient data. Many deepfakes are created using Generative Adversarial Networks (GANs), which comprise two sub-models: a generator and a discriminator. The generator creates synthetic (fake) data samples, such as images, that closely resemble the training dataset, while the discriminator acts as an evaluator, distinguishing real samples (from the training dataset) from fake ones (the generated data). This process is a constant battle, hence the term “adversarial”: as the generator improves, the discriminator must sharpen its ability to tell real from fake. The competition continues until the generator produces data virtually indistinguishable from the training data, leaving the discriminator unable to tell the two apart reliably.

Efforts to improve detection capabilities focus primarily on developing increasingly proficient discriminators to identify deepfake content. A 2022 article on AI and deepfakes by the RAND Corporation, a global policy think tank and research institute, showed that government agencies and organisations such as the Defense Advanced Research Projects Agency (DARPA) and Facebook have invested substantially in detection technologies. DARPA, for instance, allocated over $43 million to its Semantic Forensics (SemaFor) program between 2021 and 2022, while Facebook hosted a deepfake detection challenge in 2020 with over 2,000 participants. A significant challenge, and a paradox, lies in AI’s capacity for self-learning and adaptation. The result is essentially an arms race.
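The generator/discriminator contest described in this section is usually formalised, in the standard GAN formulation, as a two-player minimax game. Here D(x) is the discriminator’s estimated probability that a sample x is real, G(z) is the sample the generator produces from random noise z, p_data is the distribution of real training data, and p_z is the noise distribution:

```latex
\min_G \max_D \; V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
```

The discriminator pushes V up by scoring real samples close to 1 and generated ones close to 0; the generator pushes V down by making D(G(z)) approach 1. In the idealised limit where the generator’s output distribution matches the real data, the best the discriminator can do is output D(x) = 1/2 everywhere, which is precisely the point at which it can no longer tell real from fake. Detection research essentially tries to build discriminators that keep their edge outside this training loop.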
As discriminators and detection technologies improve, these advances are quickly absorbed into creating new deepfake content that is even harder to detect. However, all is not lost. New ideas and initiatives are emerging to tip the scales in favour of detection algorithms. These include drawing on collections of images, including synthetic media (fakes), from social media platforms to improve training and detection; developing “radioactive” training methods that effectively “fingerprint” altered media, making it easier for detectors to spot; and creating solutions that track the origin and history of images, establishing a chain of custody from creation through alteration or deletion. To combat emerging threats like Business Identity Compromise (BIC), organisations need effective and robust information security programs, including a layered approach to security, threat intelligence, adequate segregation of duties, and adherence to best practices in internal controls, such as call-back verification and well-defined procedures for high-value transactions, among others.
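The chain-of-custody idea can be illustrated with a hash-chained provenance log: each entry records the SHA-256 hash of the image at that step plus the hash of the previous entry, so any silent edit to the recorded history breaks verification. This is a simplified, hypothetical sketch. Industry provenance standards such as C2PA additionally sign their manifests with digital certificates, which matters because an attacker able to rewrite the entire chain could otherwise simply recompute every hash.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def entry_digest(body: dict) -> str:
    """Hash an entry's contents; sorted keys give a canonical JSON encoding."""
    return sha256_hex(json.dumps(body, sort_keys=True).encode("utf-8"))


def append_entry(log: list[dict], action: str, image_bytes: bytes) -> None:
    """Record an action on the image, linked to the previous entry's hash."""
    body = {
        "action": action,                      # e.g. "created", "cropped"
        "image_hash": sha256_hex(image_bytes),
        "prev_hash": log[-1]["entry_hash"] if log else GENESIS,
    }
    log.append({**body, "entry_hash": entry_digest(body)})


def verify(log: list[dict]) -> bool:
    """Recompute every link; any altered or reordered entry fails the check."""
    prev_hash = GENESIS
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "entry_hash"}
        if body["prev_hash"] != prev_hash or entry_digest(body) != entry["entry_hash"]:
            return False
        prev_hash = entry["entry_hash"]
    return True


log: list[dict] = []
append_entry(log, "created", b"original pixels")
append_entry(log, "cropped", b"cropped pixels")
print(verify(log))  # True

log[1]["action"] = "created"  # tamper: hide that the image was edited
print(verify(log))  # False
```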

In the ever-evolving landscape of AI and cybersecurity, we find ourselves at the intersection of great promise and formidable challenges. Like the contrasting forces Dickens describes in A Tale of Two Cities, the AI revolution in cybersecurity represents both the best of times and the worst of times. The relentless advance of AI has ushered in an era in which cyber threats have grown in scale, sophistication, and speed, raising the stakes for individuals and organisations worldwide. However, AI also stands as a beacon of hope, offering innovative ways to fortify our digital defences, detect and respond to threats, safeguard data privacy, and combat the rising tide of disinformation. At pcl., we understand the delicate balance between AI’s potential to undermine cybersecurity and its power to strengthen it. Our team of experts has the knowledge, experience, and dedication needed to navigate this complex landscape. We empower organisations to integrate AI safely into their daily processes and operations, implementing AI-powered solutions for stronger security, better employee training, and heightened awareness. With our guidance, your organisation can harness the transformative capabilities of AI while mitigating the associated risks. Together, we can turn this tale of two forces into a story of resilience, innovation, and security. Contact us today to embark on this transformative journey.

Written by:

Ikenna Ndukwe

Consultant