Cybercrime and cyberthreats have long been a thorn in the flesh of corporate organisations and governments, with cybercriminals becoming increasingly innovative in exploiting vulnerabilities. Before the widespread adoption of AI and large language models (LLMs) across industries in 2022, the most sophisticated and prevalent threats included phishing, ransomware, malware, zero-day exploits, Distributed Denial-of-Service (DDoS) attacks, Man-in-the-Middle (MitM) attacks, and SQL injection.

These threats were already perilous and capable of grinding organisations to a halt. For example, in March 2023, Flutterwave, Africa’s biggest fintech unicorn, woke up to a nightmare: $6.3 million missing through unauthorised transactions, in a breach that shook Nigeria’s tech industry. Two years earlier, in 2021, in a different sector and several time zones away, Colonial Pipeline, the largest refined oil products pipeline in the United States, was hit by a ransomware attack. During the intrusion, the attackers stole approximately 100 gigabytes of data in two hours.

While these threats are still relevant, they have been overshadowed by the rise of AI-powered cybercrime, which operates on a much larger and more sophisticated scale. Since AI’s rapid development and adoption in 2022, the aforementioned cyber threats have “powered up” and morphed into more refined versions, and newer, more severe threats have emerged alongside them.

This article explores the transformative role of AI in cybersecurity, emphasising the urgency of adopting AI-driven defences and strengthening cybersecurity training to counter evolving threats and safeguard national security. It examines how AI has reshaped the threat landscape, amplifying traditional risks such as phishing and ransomware while also introducing new dangers, including deepfake fraud, automated spear-phishing, and AI-enhanced malware.

Cybercrime has evolved from crude scams into sophisticated, AI-powered operations

Today, hackers have moved beyond ordinary phishing emails, crafting personalised, AI-generated messages that sound exactly like your boss. They break passwords in seconds using AI tools like PassGAN, and their scams now go far beyond fake emails, employing deepfake videos and cloned voices to impersonate CEOs, government officials, and even family members.

An example is the Hong Kong deepfake case, in which a company lost $25 million after a staff member joined a video call with a deepfake of the CFO. This wasn’t a one-off occurrence. In 2023, fraudsters cloned the voice of a UK-based company’s CEO in a phone call, convincing a manager to transfer $240,000.

Logically, the avenues for this sort of attack will only widen as AI-generated images and video advance. Tools like Google’s Veo 3 already produce footage that is almost indistinguishable from real video.

Furthermore, malware is no longer static. Hackers use AI to create polymorphic malware, which can change its own code to avoid detection by antivirus software. Black-market AI tools like WormGPT and FraudGPT are openly sold on dark web forums. These tools automate phishing, write malware, and help criminals bypass firewalls. (More examples will not be provided, so as not to empower the wrong audience.)

Unfortunately, these attacks will only become more dangerous and more costly as technology advances. One reason is that our lives are moving increasingly online, which inevitably expands the attack surface. As more companies opt for remote work, they rely on more cloud storage and more connected devices, which means more endpoints that can be targeted.

Further vulnerabilities result from the development and adoption of Internet of Things (IoT) technology. Everyday items such as coffee makers, medical equipment like pacemakers and insulin pumps, and even cars are now connected to the Internet and are being exploited.

One can only imagine the severity of having one’s pacemaker hijacked by someone with malicious intent. For all these reasons, the fight against such attacks should be taken very seriously. Thankfully, cybersecurity experts are also working to stay ahead by leveraging AI-driven tools to detect threats and protect digital assets.

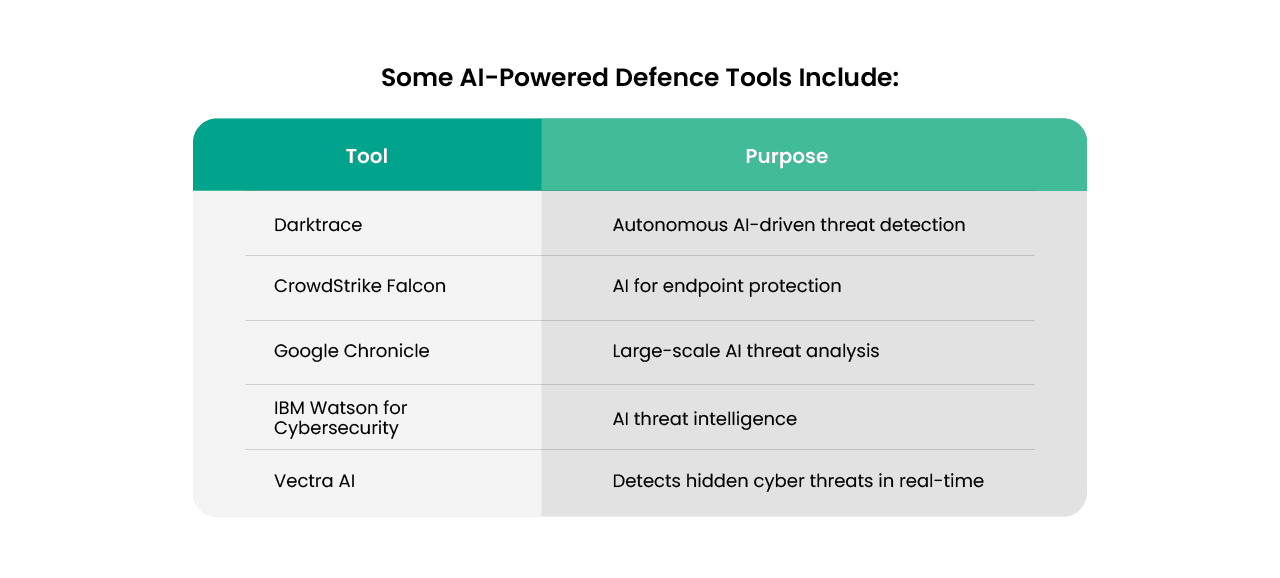

The table below provides a list of tools and their uses:

Similarly, penetration testing tools like ImmuniWeb and Metasploit leverage AI to identify vulnerabilities before hackers can exploit them. The availability of these tools does not mean we are out of the woods, as the shortage of skilled cybersecurity professionals is a problem almost as big as the cyber threats themselves.

Globally, there are over 3.5 million unfilled cybersecurity jobs. In Africa, the shortage is even more severe. This means more people need to step into this field if we are to win against these threats. Organisations also need to invest in:

- Upskilling security teams in AI tools and ethical hacking.

- Cross-functional training for legal, compliance, and management teams.

- Employee awareness to prevent human error, still the weakest link in cybersecurity.

Companies and governments must prioritise upskilling their teams, not just their tech. Security professionals need to learn how to leverage AI for defence, and every employee, regardless of role, needs to be trained to spot potential threats. After all, human error remains the most exploited vulnerability in cybersecurity. Many of the worst breaches happen not because of system flaws, but because someone clicked on a malicious link or trusted a fake voice on the phone.

A 5-Step AI-Cybersecurity Playbook for Organisations

As cyber threats become more complex with the integration of AI, organisations can no longer rely on ad-hoc defences. A structured framework is required to anticipate risks, strengthen resilience, and ensure readiness.

The following five-step playbook provides a practical guide for organisations to protect critical assets, upskill teams, and align governance structures with the realities of AI-powered cybercrime:

1. Assess Your Digital Exposure

- Conduct an AI-enabled vulnerability scan across systems, networks, and cloud services to map out your organisation’s attack surface.

- Prioritise critical assets like financial systems, health records, or government databases.
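As a rough illustration of how scan results might feed into prioritisation, the sketch below ranks assets by a simple risk score. The `Asset` fields, the scoring formula, and the sample asset names are all hypothetical and are not drawn from any particular scanning tool. A real programme would use its own risk model.

```python
from dataclasses import dataclass


@dataclass
class Asset:
    name: str
    criticality: int       # 1 (low) to 5 (business-critical), set by the organisation
    open_findings: int     # unresolved findings reported by a vulnerability scan
    internet_facing: bool  # reachable from outside the corporate network


def risk_score(asset: Asset) -> int:
    """Illustrative score: criticality times exposure (an assumed formula)."""
    # Assumption: internet-facing assets count double because they are easier to reach.
    exposure = asset.open_findings * (2 if asset.internet_facing else 1)
    return asset.criticality * exposure


def prioritise(assets: list[Asset]) -> list[Asset]:
    """Return assets ordered from highest to lowest risk score."""
    return sorted(assets, key=risk_score, reverse=True)


# Hypothetical inventory for demonstration only.
assets = [
    Asset("hr-portal", criticality=3, open_findings=4, internet_facing=False),
    Asset("marketing-site", criticality=1, open_findings=5, internet_facing=True),
    Asset("payments-api", criticality=5, open_findings=3, internet_facing=True),
]

ranked = prioritise(assets)
for asset in ranked:
    print(f"{asset.name}: {risk_score(asset)}")
```

The value of even a simple model like this is that it makes the ranking explicit and repeatable, so remediation effort goes first to the systems where a breach would hurt most.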

2. Adopt AI-Driven Defence Tools

- Deploy advanced AI solutions for real-time detection and automated response.

- Match tools to needs: endpoint protection for finance, anomaly detection for healthcare, and threat analytics for government.
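To give a sense of what anomaly detection means in practice, here is a minimal sketch that flags unusual hourly counts of failed logins using a robust z-score (median and median absolute deviation). This is a simple statistical stand-in, not how any specific commercial AI product works. The function name, the 3.5 threshold, and the sample data are assumptions made for illustration.

```python
from statistics import median


def flag_anomalies(counts: list[float], threshold: float = 3.5) -> list[int]:
    """Return the indices of values that deviate strongly from the median."""
    med = median(counts)
    # Median absolute deviation: a spread measure that outliers barely affect.
    mad = median([abs(c - med) for c in counts])
    if mad == 0:
        # No spread at all in the typical values: flag anything that differs.
        return [i for i, c in enumerate(counts) if c != med]
    # 0.6745 rescales the MAD so the score is comparable to a standard z-score.
    return [
        i for i, c in enumerate(counts)
        if 0.6745 * abs(c - med) / mad > threshold
    ]


# Hypothetical failed-login counts per hour; index 7 is a sudden spike.
hourly_failed_logins = [4, 6, 5, 7, 5, 6, 4, 95, 5, 6]
suspicious_hours = flag_anomalies(hourly_failed_logins)
print(suspicious_hours)
```

Production tools learn far richer baselines across users, devices, and time of day, but the principle is the same: establish what normal looks like, then alert on sharp departures from it.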

3. Establish an Incident Readiness Framework

- Simulate AI-powered attacks such as deepfake fraud or automated phishing.

- Run tabletop exercises so executives and employees know exactly how to respond under pressure.

4. Upskill and Certify Security Teams

- Enrol IT teams in AI-focused cybersecurity certifications.

- Build cross-functional awareness by training non-technical staff (legal, HR, operations) on AI-enabled scams.

5. Strengthen Governance and Partnerships

- Set clear policies on AI use, data security, and third-party vendor risk.

- Collaborate with cybersecurity firms, regulators, and industry peers to share intelligence and strengthen collective defences.

Conclusion

The battle between AI-driven crime and AI-driven defence is just beginning. Cyber threats will continue to evolve, but businesses, governments, and individuals can still stay protected if they take proactive steps to do so. The challenge isn’t about whether AI will change cybersecurity; it has already done so. The real question is whether you will harness AI to defend your systems, or whether cybercriminals will keep using it against you. The digital future belongs to those who are prepared. Will you be one of them?

At Phillips Consulting, we stand at the forefront of enabling this transformation. We provide tailored advisory, training, and implementation support to help organisations close the AI-cybersecurity skills gap. Recommended certifications and training include the Certified Ethical Hacker (CEH), the Certified Information Systems Security Professional (CISSP), AI for Cybersecurity programmes, CompTIA Security+, and the Offensive Security Certified Professional (OSCP). These programmes empower cybersecurity teams to stay ahead of evolving threats and to deploy AI tools for defence proactively, rather than reacting to attacks after the fact. For more information on how to access this training, contact Technology@phillipsconsulting.net.

Written by:

Oluwagbenga Pereira

Senior Analyst